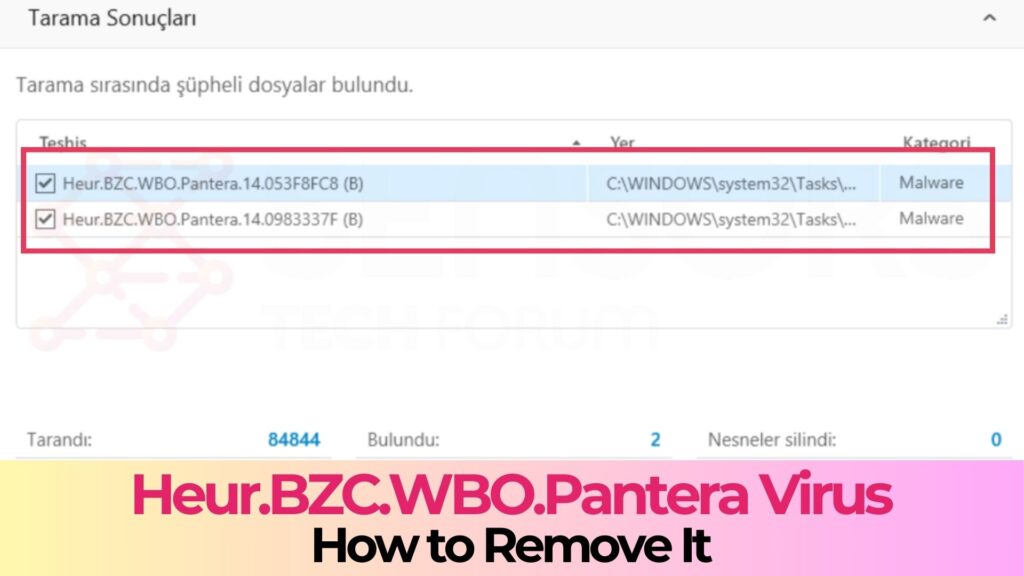

Heur.BZC.ONG.Pantera is a heuristic detection. This means that the anti-virus software that detected it cannot find exact information about this elusive Trojan, so it detects it based on the behaviour of a Trojan called Pantera.

Heur.BZC.ONG.Pantera Virus Detection - How to Remove It

Heur.BZC.ONG.Pantera is the name of a dangerous form of malware that may cause a lot of problems due to redirects and malware.

sensorstechforum.com

Hot Take Kaspersky and various other AVs can't detect simple ransomware script

- Thread starter Ahmed Uchiha


> I also don't see any reason why a user would go around, download scripts and run them.

Consumers do it all the time, not to mention enterprise and government sysadmins who do the same without even reviewing the code.

Threat actors know this, and that is why they poison GitHub, PyPI, the PowerShell Gallery and other repos: anywhere they can upload malicious scripts.

@Parkinsond

Bitdefender B-HAVE executes the file in its cloud virtual sandbox. IIRC it is in the cloud, not local execution. B-HAVE engine "detection" uses the Heur.BZG label.


Just a correction: B-HAVE runs locally, in memory, not in the cloud. However, the results of the captured behaviour can be double-checked with the cloud when certain local heuristics (silent detections) fire.

My point is, if the malicious payload / dropped file never detonates (for whatever reason), does that mean the original dropper (executable or script) is no longer considered malware?

That’s a very wide subject. Typically, malware aims to be a self-contained project; relying on dependencies makes it fragile and adds more ifs and buts to an already huge list of them. Malware will either come in an archive with all its dependencies, or it will rely on tools and utilities already provided by the OS. Sandboxes typically provide the dependencies.

When the website hosting a payload is down, the payload is still malicious. But this script is not a payload. If you define every three lines of code that cause mere inconvenience as malware, then you may as well stop paying for your broadband and disconnect.

It is not even a malware artefact; it’s something someone designed for a purpose.

There are many files like this.

It is still considered malware.

Thank you for the technical clarification.

The thing about detection or determination labels in AVs is that they're not always accurate about how and why the file was detected. A detection or block label is just one piece of the puzzle.

I recall seeing "BZG" a lot in GravityZone products.

Yeah, the sandbox analysis (as in cloud detonation) is only in the business products, and not even all of them. The thing is, once something is submitted through the business products, you can expect some reputation-based detections to occur, and they can carry the name of the heuristic that triggered the detection. You can verify whether it was reputation or a local heuristic that got triggered by turning off the Wi-Fi connection just before saving the script.


I bet this is a fully local detection. BD probably created loads of specific profiles against ransomware and then went and created profiles for individual actions which vaguely indicate ransomware, such as foul play with file extensions.
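A per-action profile of the kind guessed at above (many renames that change file extensions in a short burst) can be sketched as a sliding-window counter. This is a minimal illustration, not Bitdefender's logic: the `RenameEvent` type, the `looks_like_extension_tampering` function and the threshold/window defaults are all hypothetical.

```python
from dataclasses import dataclass
from pathlib import PurePath
from typing import Iterable


@dataclass
class RenameEvent:
    timestamp: float  # seconds since some reference point
    old_path: str
    new_path: str


def extension_changed(event: RenameEvent) -> bool:
    """True when a rename changes the final extension, e.g. report.doc -> report.doc.txt."""
    old_ext = PurePath(event.old_path).suffix.lower()
    new_ext = PurePath(event.new_path).suffix.lower()
    return old_ext != new_ext


def looks_like_extension_tampering(events: Iterable[RenameEvent],
                                   threshold: int = 20,
                                   window: float = 10.0) -> bool:
    """Flag when at least `threshold` extension-changing renames fall within `window` seconds.

    Both defaults are arbitrary illustration values, not any vendor's real settings.
    """
    times = sorted(e.timestamp for e in events if extension_changed(e))
    start = 0
    for end, t in enumerate(times):
        # Slide the left edge forward until the span fits inside the window.
        while t - times[start] > window:
            start += 1
        if end - start + 1 >= threshold:
            return True
    return False
```

On its own such a signal is weak (a user's bulk-rename tool would trip it too), which fits the idea of profiles that only "vaguely indicate" ransomware and are presumably combined with other evidence.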

I concur... it is still malware even though it is harmless if the payload does not detonate (for whatever reason).

Spill the beans: what’s your beef with detonation? You keep mentioning that.

Detonations are super cool, but not if they do not happen.

Don't get me wrong, sandboxes are great, my products even execute samples in a sandbox during analysis, and we can add whatever additional sandbox features we want or need.

As we all know, security is about layers, and no single malware analysis method is going to detect every piece of malware.

So I am a fan of sandboxes, and I look forward to the day that someone gets it right. It will happen... especially with generative AI.

But sandboxes usually use static analysis too… In the case of Check Point, for example, there are all sorts of engines: CP’s own engine, Bitdefender signatures, static analysis, analysis of PDF files (including the links in them), analysis of the PDF/document content and so on. The sandbox follows all the links, downloads all files, and analyses shortcuts, DLL-to-EXE relationships and much more. It is even CPU-reinforced: evasions are detected at CPU level. It also simulates user activity (moves the mouse, presses buttons such as Next), detects DGA by obtaining all the domains the malware uses, and more. The sandbox consists of over 70 engines, many of them based on deep learning.

That’s not to say it is infallible; nothing ever is. But the successful detection rate is very high.

Or if it’s my Orion project that you are discussing (because I am not sure how the subject of detonation came up, and why), Orion has got nothing to do with Sirius or with the sandboxes from major vendors. Orion works as an interpreter for the VirusTotal results, which include the behavioural reports.

I created a multi-layer heuristic system. The first layer converts the report into actions, and the second layer combines actions into behavioural profiles. It serves as eyes and ears for data that otherwise no one understands or even looks at.

I created this heuristic interpreter to save users’ Gemini input/output tokens.

There is additional intelligence that I am now integrating.
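The two layers described above (report to actions, actions to behavioural profiles) can be sketched as follows. The rule patterns, action names and profiles are hypothetical placeholders: Orion's actual rules and the structure of VirusTotal behaviour reports are not shown in the thread, so report lines are modeled here as plain strings.

```python
import re
from dataclasses import dataclass
from typing import Iterable, List, Set

# Layer 1 (hypothetical rules): raw report line pattern -> abstract action.
ACTION_RULES = {
    "file_rename": re.compile(r"\b(rename|movefile)\b", re.IGNORECASE),
    "shadow_copy_delete": re.compile(r"vssadmin\s+delete\s+shadows", re.IGNORECASE),
    "http_download": re.compile(r"\bhttps?://", re.IGNORECASE),
    "process_create": re.compile(r"\bcreateprocess\b", re.IGNORECASE),
}


@dataclass(frozen=True)
class Profile:
    name: str
    required: frozenset  # every action in this set must be present


# Layer 2 (hypothetical profiles): combinations of actions.
PROFILES = [
    Profile("ransomware-like", frozenset({"file_rename", "shadow_copy_delete"})),
    Profile("downloader-like", frozenset({"http_download", "process_create"})),
]


def report_to_actions(report_lines: Iterable[str]) -> Set[str]:
    """Layer 1: turn raw behaviour-report lines into a set of abstract actions."""
    actions = set()
    for line in report_lines:
        for action, pattern in ACTION_RULES.items():
            if pattern.search(line):
                actions.add(action)
    return actions


def actions_to_profiles(actions: Set[str]) -> List[str]:
    """Layer 2: report every profile whose required actions are all present."""
    return [p.name for p in PROFILES if p.required <= actions]
```

Keeping the layers separate means a new profile can reuse existing actions without touching the report-parsing rules, and only the short list of matched profiles (rather than the raw report) would need to be passed on, which is the token-saving idea mentioned above.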


Is BZG short for BaZanG? Forgive me, I'm only Hooman. Hehe.

Cheers guys!


Antivirus naming conventions go by malware family (Zeus, Conficker, etc.).

If it falls outside of that, it's named by category (hack tool, PUP, etc.) or by heuristics (generic, Artemis, etc.).

If none of those apply, a new name is created.
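The fallback order above (family, then category, then heuristic, otherwise a new name) amounts to picking the first label that applies. A minimal sketch, where `choose_label` and its parameters are hypothetical; real vendors apply far more elaborate rules.

```python
from typing import Optional, Tuple


def choose_label(family: Optional[str],
                 category: Optional[str],
                 heuristic: Optional[str],
                 new_name: str) -> Tuple[str, str]:
    """Return (basis, label): family first, then category, then heuristic.

    `new_name` stands in for a freshly coined name and is used only when
    none of the other three applies.
    """
    for basis, label in (("family", family),
                         ("category", category),
                         ("heuristic", heuristic)):
        if label:
            return basis, label
    return "new", new_name
```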

A detection name often contains internal names of technologies. Trend Micro is one example: VSX (Virtual Security Extension) is the equivalent of B-HAVE, and TRX refers to Trend Micro X-Gen, their cloud AI. So `Trojan.VSX.TRX.<model identifier>` merely serves as debug information for Trend Micro; it points them to the machine learning models that deal with local emulation.

The same is true of McAfee Real Protect-LS (local static), where an exclamation mark denotes a “weak classifier”, which could be a generic detection or AI-based.

There are many others like that: Check Point `Gen.SB.<file format>` and `Gen.SA.<file format>`, Kaspersky UDS, Dr.Web Siggen, Symantec `SONAR.<something>`, `Bloodhound.<something>` (detecting exploits), `Trojan.Gen.NPE` (first spotted by Power Eraser), Avast EVO (the technology is called EVO-Gen) and so on.

In this case, Pantera might well be an internal Bitdefender name for a set of heuristics that deal specifically with data encryption for impact, data destruction and so on.
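Reading a detection name as debug information, as described above, amounts to splitting it on dots and looking up tokens that name internal technologies. The table below is a hypothetical sketch filled in only from the examples mentioned in this thread; tokens it does not know (such as `BZC` or `ONG` in `Heur.BZC.ONG.Pantera`) simply stay unexplained.

```python
from typing import List, Tuple

# Hypothetical lookup table, built only from examples mentioned in this thread.
TECH_TOKENS = {
    "VSX": "Trend Micro VSX (Virtual Security Extension), local emulation",
    "TRX": "Trend Micro X-Gen cloud AI",
    "BZG": "Bitdefender B-HAVE label, as reported in this thread",
    "UDS": "Kaspersky UDS",
    "SONAR": "Symantec SONAR",
    "Bloodhound": "Bloodhound (exploit detections)",
    "NPE": "first spotted by Power Eraser",
}


def explain_detection(name: str) -> List[Tuple[str, str]]:
    """Split a dotted detection name and annotate tokens that match a known technology."""
    return [(token, TECH_TOKENS[token]) for token in name.split(".") if token in TECH_TOKENS]
```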


Yes, for DEBUG purposes. This is useful for Tech Support/AV Labs to pinpoint what's triggering the detection. Together with the Trend Micro SIC tool (System Information Collector), TM will have a better idea of what's happening on the infected system.

Oh, I wasn't referring to any product specifically, especially not Orion; sorry if you thought that was the case. We started talking about sandboxes on the previous page, so the conversation drifted a little, and that led to detonation.

To sum up, no product is perfect, sandboxes are cool and they are only going to get better with generative AI.

> McAfee and ZoneAlarm Threat Emulation conclude the script is clean. So does my upcoming Orion engine.

So the bigger debate should be Bitdefender false-flagging it.

> So any time you see any kind of coding in any script, PS1 or BAT and so forth, you consider it malicious? It may sound harsh, but you had better learn to program: sit in on a Computer Science 101 class at a university. You don't need to pass the entrance test; just get the class schedule, find the classroom and sit down. Most professors allow that. Malware detection requires one to understand code a little bit.

I just uploaded the source code to ChatGPT, but it still says it's risky.

Short answer: risky — unless you run it only in a controlled test folder after doing a dry-run and a backup.

Why I call it risky

- It blindly renames every entry matched by `*.*` in the current directory. That can include important files (documents, executables, config files) and may produce unwanted `.txt` suffixes.
- Files already ending in `.txt` will become `.txt.txt`.
- Name collisions: if `foo.doc` → `foo.doc.txt` but `foo.doc.txt` already exists, `ren` will fail or cause unexpected behavior.
- The batch file itself (or other scripts) can be targeted and renamed while running, which is fragile and confusing.
- No checks for read-only or system/hidden attributes, no logging, no dry-run.
- Could break other programs that expect the original filenames (software, scripts, indexes, backups).
- Permissions or antivirus may stop or partially complete the job, leaving an inconsistent state.

It is only acceptable when:

- You run it in an empty or disposable test folder (e.g., created only for this task).
- You run a dry-run first and verify the results.
- You have a backup or versioned copy of the files.

A safer batch version (skips files already ending in `.txt`, skips the script itself, avoids overwriting existing targets, logs):

Code:

```bat
@echo off
setlocal enabledelayedexpansion
set "LOG=rename_log.txt"
echo Rename run at %DATE% %TIME% > "%LOG%"
rem Dry-run mode: change DRYRUN=1 to DRYRUN=0 to actually perform renames
set "DRYRUN=1"
rem Note: with delayed expansion on, file names containing an exclamation mark get mangled
for %%F in (*.*) do (
  rem skip directories: a path with a trailing backslash only exists for folders
  if not exist "%%~fF\" (
    rem skip files already .txt
    if /I not "%%~xF"==".txt" (
      rem skip the script itself
      if /I not "%%~nxF"=="%~nx0" (
        set "dst=%%~nxF.txt"
        rem unescaped parentheses or a bare greater-than sign inside this block would break it
        if exist "!dst!" (
          echo SKIP [target exists]: "%%F" to "!dst!">> "%LOG%"
          echo Skipping "%%F" [target exists]
        ) else (
          echo Planned: ren "%%F" "!dst!">> "%LOG%"
          if "!DRYRUN!"=="0" ren "%%F" "!dst!"
        )
      ) else (
        echo SKIP [script]: "%%F">> "%LOG%"
      )
    ) else (
      echo SKIP [.txt already]: "%%F">> "%LOG%"
    )
  )
)
endlocal
```

- Start with `DRYRUN=1` to print/log the planned actions; set it to `0` only after you verify the log.
- Run it in the folder where you want the action, not in system folders.
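The skip rules of the safer batch script (already `.txt`, the script itself, existing target) can also be expressed as a pure dry-run planner, which makes them easy to check before anything touches the disk. This is a sketch assuming you pass in plain file names from a single folder; `plan_renames` is a hypothetical helper and performs no renames.

```python
from typing import Iterable, List, Tuple


def plan_renames(names: Iterable[str], self_name: str
                 ) -> Tuple[List[Tuple[str, str]], List[Tuple[str, str]]]:
    """Dry-run planner mirroring the safer batch script; nothing is renamed.

    Returns (planned, skipped): planned holds (source, target) pairs,
    skipped holds (name, reason) pairs. Comparisons are case-insensitive,
    as they are on Windows.
    """
    names = list(names)
    taken = {n.lower() for n in names}  # names that already exist in the folder
    planned: List[Tuple[str, str]] = []
    skipped: List[Tuple[str, str]] = []
    for name in names:
        if name.lower().endswith(".txt"):
            skipped.append((name, ".txt already"))
        elif name.lower() == self_name.lower():
            skipped.append((name, "script"))
        else:
            target = name + ".txt"
            if target.lower() in taken:
                skipped.append((name, "target exists"))
            else:
                planned.append((name, target))
                taken.add(target.lower())  # later entries must not reuse this name
    return planned, skipped
```

For example, `plan_renames([p.name for p in Path(".").iterdir() if p.is_file()], "rename.bat")` (after `from pathlib import Path`) lists what would happen in the current folder without changing it.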

Code:

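```powershell
# $PSCommandPath is this script's path (empty if pasted into a console), so the script is never renamed
$self = if ($PSCommandPath) { Split-Path -Leaf $PSCommandPath } else { '' }
Get-ChildItem -File | Where-Object { $_.Extension -ne '.txt' -and $_.Name -ne $self } |
ForEach-Object {
    $new = $_.Name + '.txt'
    $target = Join-Path $_.DirectoryName $new
    if (-not (Test-Path -LiteralPath $target)) {
        Write-Output "Rename: $($_.Name) -> $new"
        # Rename-Item -LiteralPath $_.FullName -NewName $new  # remove the comment to apply
    } else {
        Write-Output "Skip (exists): $new"
    }
}
```

- Use `-WhatIf` or comment out `Rename-Item` while testing.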

- Work in a test folder first.

- Run a dry-run and inspect the planned changes (log).

- Make a backup or snapshot.

- Exclude `.txt` files, the script itself, and any important system folders.
- Ensure you understand how name collisions will be handled.

- If many files, test a small subset first.
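If you port the rename to Python, the collision rule needs an explicit check, because `os.rename` does not behave the same everywhere: on POSIX it silently replaces an existing target, while on Windows it raises `FileExistsError`. A minimal no-overwrite wrapper, assuming a small check-then-rename race is acceptable for a manual cleanup job (`rename_no_clobber` is a hypothetical helper):

```python
import os


def rename_no_clobber(src: str, dst: str) -> bool:
    """Rename src to dst only if dst does not exist; return True if renamed.

    The explicit check makes POSIX behave like Windows here. It is not atomic:
    another process could create dst between the check and the rename.
    """
    if os.path.lexists(dst):
        return False
    try:
        os.rename(src, dst)
    except FileExistsError:
        # Windows: dst appeared between the check and the rename.
        return False
    return True
```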

1) Simple example — how names change (expected, immediate problem)

Start with this folder contents (example):

Code:

```text
report.doc
photo.jpg
notes.txt
program.exe
script.bat
archive.tar.gz
README
```

Code:

```bat
for %%f in (*.*) do (
  ren "%%f" "%%~nxf.txt"
)
```

`%%~nxf` expands to the original name plus extension, so the renames attempted are:

- `report.doc` → `report.doc.txt` (probably fine)
- `photo.jpg` → `photo.jpg.txt`
- `notes.txt` → `notes.txt.txt` (now doubled)
- `program.exe` → `program.exe.txt` (no longer executable)
- `script.bat` → `script.bat.txt` (the batch file itself matches and will be targeted)
- `archive.tar.gz` → `archive.tar.gz.txt`
- `README` → `README.txt`: it has no dot, but in `cmd` the `*.*` wildcard also matches names without an extension, so it is not skipped

The `.exe` and `.bat` files now have `.txt` appended, which immediately breaks executability and any scripts that expect those names.

2) Name-collision example (actual failure)

If a file with the target name already exists, the rename will fail for that file. Start with:

Code:

```text
foo.doc
foo.doc.txt   <-- already exists
```

Code:

```bat
ren "foo.doc" "foo.doc.txt"
```

`foo.doc` remains unchanged even though the user expected it to be renamed. If your script runs on hundreds of files, you get a chaotic partial state (some renamed, some failed): an inconsistent file set.

3) Breakage of programs / services (real-world consequence)

If you run the script in a folder that contains application files:

- `program.exe` → `program.exe.txt`: the program will not run (double-click tries to open it as text).
- `lib.dll` → `lib.dll.txt`: dependent applications will fail to load that DLL and may crash.
- `config.json` → `config.json.txt`: services expecting `config.json` will not find it and may fail to start or revert to defaults.
- Scheduled tasks/scripts that reference exact filenames will break.
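Windows picks the program that opens a file from its last extension, which is why `program.exe.txt` opens as text. A small sketch that flags such renames, assuming the sensitive extensions are just the ones named in this section (`SENSITIVE` and `broken_by_suffix` are hypothetical names):

```python
from pathlib import PureWindowsPath

# Extensions whose loss breaks something, taken from the examples in this section.
SENSITIVE = {".exe", ".dll", ".bat", ".json"}


def broken_by_suffix(original: str, renamed: str) -> bool:
    """True if a rename moves a sensitive extension out of the final position."""
    before = PureWindowsPath(original).suffix.lower()
    after = PureWindowsPath(renamed).suffix.lower()
    return before in SENSITIVE and after != before
```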

4) The batch file itself can be targeted (confusing/unreliable)

Your script uses `*.*`, so if the batch file (say `rename.bat`) lives in the same folder, it will be included and an attempt will be made to rename it to `rename.bat.txt`. Even where renaming an executing script is allowed, this leads to confusing states: the script’s filename changes on disk while it is running, future calls to the same filename will fail, and maintenance becomes harder. It’s poor practice and can produce unexpected behavior.

Note: OS behavior around renaming an open/running file can vary; whether Windows allows renaming an executing batch file is environment-dependent. Regardless, trying to rename your running script is undesirable and risky.

5) Hidden/system files, backups, and permission errors

- If the directory contains read-only, system, or in-use files, some `ren` operations will fail, producing a mixture of successful and failed renames.
- Antivirus or policy software may block or quarantine files mid-run, again leaving the folder inconsistent.

- If you run the script in a system folder (or a folder used by backups, version control, or services), you can corrupt backups or break those services.

6) Demonstration you can run safely (dry-run)

Here’s a safe dry-run test you can run in a disposable folder (create a test folder first).

Batch dry-run simulation (it does not run the real `ren` command, it only shows the planned actions):

Code:

```bat
@echo off
echo Dry-run: planned renames
for %%f in (*.*) do (
  echo ren "%%f" "%%~nxf.txt"
)
```

This prints each `ren` command the script would execute. Inspect the output and you’ll see all the problem cases (e.g., `notes.txt` → `notes.txt.txt`, `program.exe` → `program.exe.txt`, and `script.bat` → `script.bat.txt`).

7) Summary: short, provable reasons it's unsafe

- Unselective: it renames every `*.*` match, including `.exe`, `.dll`, `.bat` and `.sys`-like names, which breaks executability and program behavior. (You can prove this by creating an `.exe` file and observing that it becomes non-executable.)
- Doubles `.txt`: files already ending in `.txt` become `*.txt.txt`, an obvious unwanted change you can reproduce instantly.
- Collisions: if `name.ext.txt` already exists, the rename fails, and you get partial/uneven results that are hard to recover from.
- Self-targeting: the script can rename itself; you can demonstrate this by running it in the same folder as the script and observing the file name change or an error.

- No safeguards/logging/backups: There’s no dry-run, no check for existing targets, and no recovery plan — so mistakes are permanent unless you have backups.

8) How to prove it yourself in one minute (safe)

- Create a new folder `C:\temp\rename-test`.
- Put these files in it: `test.exe`, `notes.txt`, `doc.doc`.
- Run the dry-run batch I gave above (it only `echo`s the `ren` commands).
- Observe the planned renames: `test.exe` → `test.exe.txt` (broken), `notes.txt` → `notes.txt.txt` (wrong), `doc.doc` → `doc.doc.txt` (maybe desired).
- If you want to test an actual rename, copy the folder first (backup), then run the real script in the copy and observe the destructive changes.

Thank you for testing Bitdefender.

Would ESET and detect the script too or they would flag it as safe.
